Aligning adult mouse coronal brain sections with the Allen Brain Atlas

In this notebook, we will align a MERFISH dataset of an adult mouse coronal brain section to the Allen Brain Atlas. You can download additional MERFISH datasets and find more details about this data here: https://info.vizgen.com/mouse-brain-data

We will use STalign to achieve this alignment. We will first load the relevant code libraries.

[1]:

#Import dependencies

import numpy as np

#%matplotlib notebook

import matplotlib.pyplot as plt

import pandas as pd # for csv.

from matplotlib import cm

from matplotlib.lines import Line2D

import os

from os.path import exists,split,join,splitext

from os import makedirs

import glob

import requests

from collections import defaultdict

import nrrd

import torch

from torch.nn.functional import grid_sample

import tornado

import copy

import skimage

from mpl_toolkits.mplot3d.art3d import Poly3DCollection

[ ]:

# OPTION A: import STalign after pip or pipenv install

from STalign import STalign

[2]:

## OPTION B: skip this cell if you installed STalign with pip or pipenv

import sys

sys.path.append("../../STalign")

## import STalign from upper directory

import STalign

We have already downloaded the MERFISH datasets (link above) and stored them in a folder called merfish_data.

Now we can read in the cell information using pandas (imported as pd).

We must load the x and y positions of all cells into the variables x and y, respectively. Importantly, the scale of the loaded data must approximately match the Allen Brain Atlas, where 1 pixel unit is ~1 µm.

[3]:

#Load file

filename = '../merfish_data/s1r1_metadata.csv.gz' #change to the path of your metadata file

df = pd.read_csv(filename)

#Load x position

x = np.array(df['center_x']) #change to the column with x positions of cells

#Load y position

y = np.array(df['center_y']) #change to the column with y positions of cells
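
Because the alignment assumes coordinates of roughly 1 µm per unit, it can help to check the extent of the loaded positions before continuing. The sketch below is only an illustration: the helper `rescale_to_microns` and the pixel size of 0.108 µm are hypothetical and are not part of STalign or of this dataset's documentation.

```python
import numpy as np

def rescale_to_microns(coords, microns_per_unit):
    """Convert coordinates to micrometers, given the size of one input unit."""
    return np.asarray(coords, dtype=float) * microns_per_unit

# Hypothetical example: centroids stored in pixels, assuming 0.108 um per pixel
x_pixels = np.array([0.0, 50000.0, 100000.0])
x_um = rescale_to_microns(x_pixels, microns_per_unit=0.108)

# An adult mouse coronal section is on the order of a centimeter wide,
# so a span of several thousand units suggests the data are close to um.
span_um = x_um.max() - x_um.min()
print(f"x span: {span_um:.0f} um")
```

If the span of your own coordinates is far from this order of magnitude, the positions probably need to be rescaled before rasterization.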

[4]:

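dx = 10 #rasterization pixel size, in the same units as x and y (µm here)

blur = 1 #amount of Gaussian smoothing applied during rasterization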

Now we can load in data from the Allen Brain Atlas.

We need to download and save files containing the ontology of regions, a 3D cell density atlas, and annotations of brain regions in that 3D atlas.

Depending on which Allen Atlas you would like to use, you can change the URLs that these files are downloaded from. In this example, we use the adult mouse (P56) Allen Atlas.

[5]:

url = 'http://api.brain-map.org/api/v2/data/query.csv?criteria=model::Structure,rma::criteria,[ontology_id$eq1],rma::options[order$eq%27structures.graph_order%27][num_rows$eqall]'

ontology_name,namesdict = STalign.download_aba_ontology(url, 'allen_ontology.csv') #url for adult mouse

<Response [200]>

[6]:

imageurl = 'http://download.alleninstitute.org/informatics-archive/current-release/mouse_ccf/ara_nissl/ara_nissl_50.nrrd'

labelurl = 'http://download.alleninstitute.org/informatics-archive/current-release/mouse_ccf/annotation/ccf_2017/annotation_50.nrrd'

imagefile, labelfile = STalign.download_aba_image_labels(imageurl, labelurl, 'aba_nissl.nrrd', 'aba_annotation.nrrd')

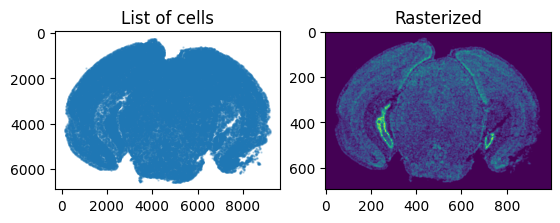

Our first step in this alignment will be to rasterize the single cell centroid positions into an image. This converts our x and y arrays of cell positions into a pixelated image of cell density. For this example, we choose to rasterize at a resolution of 10 µm per pixel (dx = 10).

[7]:

#Rasterize Image

X_,Y_,W = STalign.rasterize(x,y,dx=dx, blur = blur,draw=False)
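
To make the idea of rasterization concrete, here is a minimal sketch that bins points into pixels of size dx and then smooths the counts. It is not STalign's implementation: the function `rasterize_sketch` is hypothetical, and it assumes that blur acts as a Gaussian sigma measured in pixels.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

def rasterize_sketch(x, y, dx=10.0, blur=1.0):
    """Bin points into dx-sized pixels, then smooth with a Gaussian (sigma in pixels)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Pixel edges that cover the full range of the data
    x_edges = np.arange(x.min(), x.max() + dx, dx)
    y_edges = np.arange(y.min(), y.max() + dx, dx)
    # Rows index y and columns index x, as in an image
    counts, _, _ = np.histogram2d(y, x, bins=[y_edges, x_edges])
    image = gaussian_filter(counts, sigma=blur)
    x_centers = 0.5 * (x_edges[:-1] + x_edges[1:])
    y_centers = 0.5 * (y_edges[:-1] + y_edges[1:])
    return x_centers, y_centers, image

# Synthetic cell positions (hypothetical data, in um)
rng = np.random.default_rng(0)
x_demo = rng.uniform(0, 1000, size=2000)
y_demo = rng.uniform(0, 500, size=2000)
x_centers, y_centers, image = rasterize_sketch(x_demo, y_demo, dx=10.0, blur=1.0)
print(image.shape)
```

A smaller dx keeps more spatial detail but produces a larger image, which makes the later optimization slower.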

We can visualize the resulting rasterized image.

[8]:

#Plot unrasterized/rasterized images

fig,ax = plt.subplots(1,2)

ax[0].scatter(x,y,s=0.5,alpha=0.25)

ax[0].invert_yaxis()

ax[0].set_title('List of cells')

ax[0].set_aspect('equal')

W = W[0]

extent = (X_[0],X_[-1],Y_[0],Y_[-1])

ax[1].imshow(W, origin='lower')

ax[1].invert_yaxis()

ax[1].set_title('Rasterized')

# save figure

#fig.canvas.draw()

#fig.savefig(outname[:-4]+'_image.png')

[8]:

Text(0.5, 1.0, 'Rasterized')

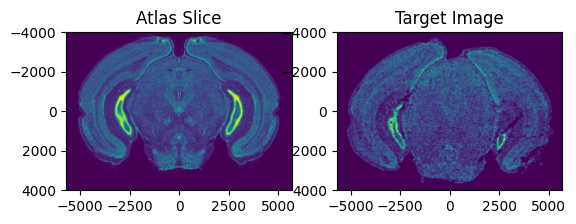

Now we need to find an approximate slice number in the Allen Atlas to initialize the alignment. Compare the atlas slice and the target image side by side; if they do not look similar, change the value of slice. In this example, slice = 0 is anterior and slice = 264 is posterior.

[9]:

#find slice

#peruse through images in atlas

# Loading the atlas

slice = 177

vol,hdr = nrrd.read(imagefile)

A = vol

vol,hdr = nrrd.read(labelfile)

L = vol

dxA = np.diag(hdr['space directions'])

nxA = A.shape

xA = [np.arange(n)*d - (n-1)*d/2.0 for n,d in zip(nxA,dxA)]

XA = np.meshgrid(*xA,indexing='ij')

fig,ax = plt.subplots(1,2)

extentA = STalign.extent_from_x(xA[1:])

ax[0].imshow(A[slice],extent=extentA)

ax[0].set_title('Atlas Slice')

ax[1].imshow(W,extent=extentA)

ax[1].set_title('Target Image')

fig.canvas.draw()

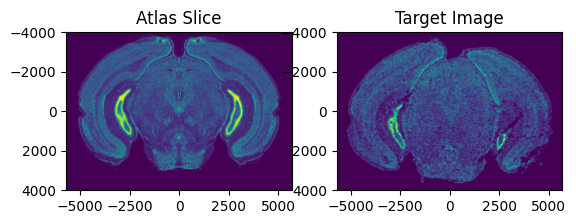
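
The atlas coordinates xA built in the cell above are centered on zero along each axis, so a slice index maps to a physical position along the first axis. A minimal sketch of that construction, using a hypothetical axis of 5 samples spaced 50 units apart rather than the real atlas dimensions:

```python
import numpy as np

def centered_axis(n, d):
    """Coordinates of n samples with spacing d, centered on zero."""
    return np.arange(n) * d - (n - 1) * d / 2.0

# Hypothetical small axis: 5 slices spaced 50 units apart
axis = centered_axis(5, 50.0)
print(axis.tolist())  # [-100.0, -50.0, 0.0, 50.0, 100.0]

# The physical position of a chosen slice is a lookup into this axis
slice_index = 3
print(axis[slice_index])  # 50.0
```

The same lookup is what turns the chosen slice number into a position along the slicing axis when the initial translation is built later in the notebook.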

Next, we need to find an approximate rotation angle of the Allen Atlas to initialize the alignment. Compare the rotated atlas slice and the target image side by side; if their orientations differ, change the value of theta_deg. Note that the rotation is specified in degrees.

[10]:

from scipy.ndimage import rotate

theta_deg = 0

fig,ax = plt.subplots(1,2)

extentA = STalign.extent_from_x(xA[1:])

ax[0].imshow(rotate(A[slice], angle=theta_deg),extent=extentA)

ax[0].set_title('Atlas Slice')

ax[1].imshow(W,extent=extentA)

ax[1].set_title('Target Image')

fig.canvas.draw()

Next, specify any points in the images above that can be approximately matched with each other, and add them in the following format. This step is optional, but it helps speed up the alignment.

[11]:

points_atlas = np.array([[0,2580]])

points_target = np.array([[8,2533]])

Li,Ti = STalign.L_T_from_points(points_atlas,points_target)
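
To see why matched points help, note that even a single pair of landmarks gives an estimate of the offset between the two images. The sketch below computes the mean offset between matched landmarks. It illustrates the idea only and is not a description of what STalign.L_T_from_points does internally; the helper `mean_translation` is hypothetical.

```python
import numpy as np

def mean_translation(points_source, points_target):
    """Average offset that moves source landmarks onto target landmarks."""
    src = np.asarray(points_source, dtype=float)
    tgt = np.asarray(points_target, dtype=float)
    if src.shape != tgt.shape:
        raise ValueError("landmark arrays must have matching shapes")
    return (tgt - src).mean(axis=0)

# Same landmark pair as in the cell above
offset = mean_translation([[0, 2580]], [[8, 2533]])
print(offset.tolist())  # [8.0, -47.0]
```

With more landmark pairs, the mean averages out small errors in where each point was clicked.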

We can now define the atlas and target pixel coordinates, xI and xJ, as well as the atlas and target images, I and J.

[12]:

xJ = [Y_,X_]

J = W[None]/np.mean(np.abs(W))

xI = xA

I = A[None] / np.mean(np.abs(A),keepdims=True)

I = np.concatenate((I,(I-np.mean(I))**2))
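
The cell above normalizes each image by its mean absolute intensity and gives the atlas a second channel holding the squared deviation from the mean. The sketch below repeats those two steps on a small random array so the resulting shapes are easy to inspect; `A_demo` is a hypothetical stand-in for the atlas volume.

```python
import numpy as np

rng = np.random.default_rng(0)
A_demo = rng.random((4, 5, 6))  # hypothetical stand-in for the 3D atlas volume

# Add a leading channel axis and normalize so the mean absolute intensity is 1
I_demo = A_demo[None] / np.mean(np.abs(A_demo), keepdims=True)
print(I_demo.shape)  # (1, 4, 5, 6)

# Append a second channel: squared deviation from the mean intensity
I_demo = np.concatenate((I_demo, (I_demo - np.mean(I_demo)) ** 2))
print(I_demo.shape)  # (2, 4, 5, 6)
```

The extra channel lets the intensity mapping between atlas and target include a quadratic term in the atlas intensity.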

Now that we have chosen the slice number and rotation angle, we are ready to initialize parameters related to our alignment.

[13]:

sigmaA = 2 #standard deviation of artifact intensities

sigmaB = 2 #standard deviation of background intensities

sigmaM = 2 #standard deviation of matching tissue intensities

muA = torch.tensor([3,3,3],device='cpu') #average of artifact intensities

muB = torch.tensor([0,0,0],device='cpu') #average of background intensities

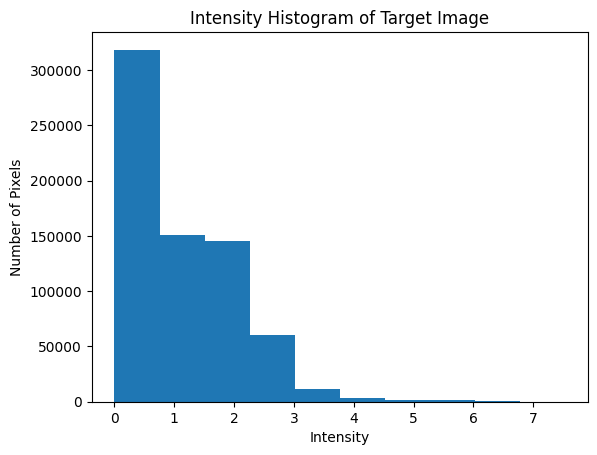

We can adjust the parameters above by looking at the intensity histogram of our target image (below). We need to consider the intensities of artifacts (tissue that is present in the target image but absent in the atlas), which are usually in the upper range of intensity values. We also need to find the intensity of the background, which does not correspond to any tissue and is usually around 0, along with standard deviations for these values. The matching tissue intensities come from regions where tissue is present in both the atlas and the target image and can therefore be aligned.

[14]:

fig,ax = plt.subplots()

ax.hist(J.ravel())

plt.xlabel('Intensity')

plt.ylabel('Number of Pixels')

plt.title('Intensity Histogram of Target Image')

[14]:

Text(0.5, 1.0, 'Intensity Histogram of Target Image')

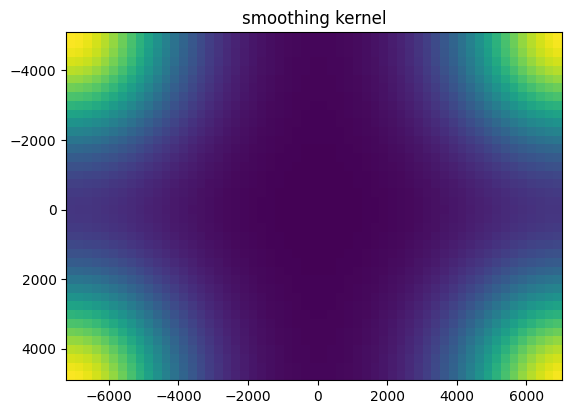

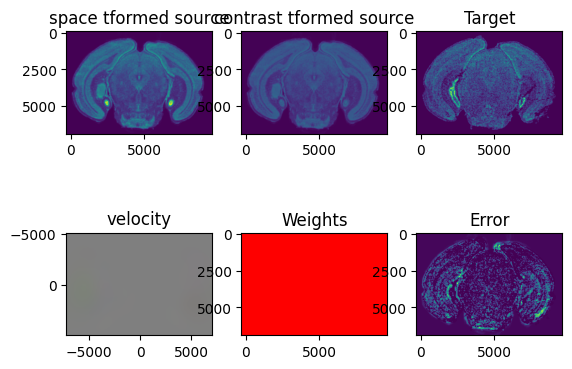

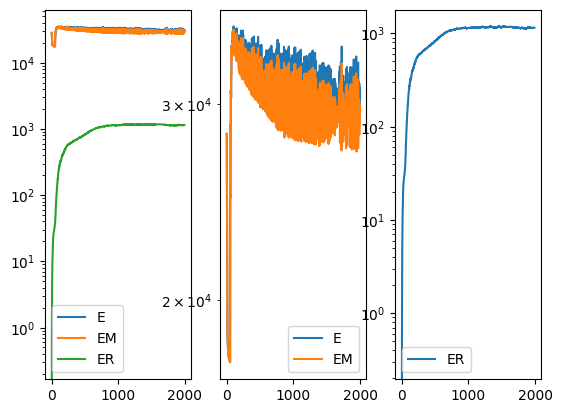
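
As a complement to reading values off the histogram, simple percentile summaries can suggest starting points for the background and artifact parameters. The sketch below is a heuristic under stated assumptions, not an STalign feature: the function `intensity_summary` and the percentile cutoffs of 50 and 99 are hypothetical choices.

```python
import numpy as np

def intensity_summary(image, low_pct=50.0, high_pct=99.0):
    """Summarize the dim and bright ends of an image's intensity distribution."""
    values = np.asarray(image, dtype=float).ravel()
    low_cut, high_cut = np.percentile(values, [low_pct, high_pct])
    background = values[values <= low_cut]  # candidate background pixels
    bright = values[values >= high_cut]     # candidate artifact pixels
    return {
        "background_mean": background.mean(),
        "background_std": background.std(),
        "bright_mean": bright.mean(),
        "bright_std": bright.std(),
    }

# Synthetic image: mostly empty background plus a small bright patch
rng = np.random.default_rng(0)
image_demo = rng.poisson(0.2, size=(100, 100)).astype(float)
image_demo[:5, :5] += 20.0
summary = intensity_summary(image_demo)
print(summary)
```

These numbers are only a first guess; the histogram and the alignment results should guide any final choice.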

The following parameters depend on your target image and on the trade-off between the duration and the accuracy of the alignment. The scale parameters rescale the atlas in (x, y, z) to match the size of the target image. The nt parameter sets the number of time steps used to integrate the velocity field, which helps keep the image transformation smooth and invertible. The niter parameter sets the number of gradient descent iterations used to minimize the error between the atlas and the target image.

[15]:

# initialize variables

scale_x = 0.9 #default = 0.9

scale_y = 0.9 #default = 0.9

scale_z = 0.9 #default = 0.9

theta0 = (np.pi/180)*theta_deg

# get an initial guess

if 'Ti' in locals():

    T = np.array([-xI[0][slice],np.mean(xJ[0])-(Ti[0]*scale_y),np.mean(xJ[1])-(Ti[1]*scale_x)])

else:

    T = np.array([-xI[0][slice],np.mean(xJ[0]),np.mean(xJ[1])])

scale_atlas = np.array([[scale_z,0,0],

[0,scale_x,0],

[0,0,scale_y]])

L = np.array([[1.0,0.0,0.0],

[0.0,np.cos(theta0),-np.sin(theta0)],

[0.0,np.sin(theta0),np.cos(theta0)]])

L = np.matmul(L,scale_atlas)#np.identity(3)
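
The initial linear map built above is a rotation about the first (slicing) axis multiplied by a diagonal scaling. The sketch below wraps the same construction in a function so its properties can be checked; the name `initial_linear_map` is hypothetical, and the order of the scale factors follows the cell above.

```python
import numpy as np

def initial_linear_map(theta_deg, scale_z, scale_x, scale_y):
    """Rotation about the first axis composed with a diagonal scaling."""
    theta = np.deg2rad(theta_deg)
    rotation = np.array([
        [1.0, 0.0, 0.0],
        [0.0, np.cos(theta), -np.sin(theta)],
        [0.0, np.sin(theta), np.cos(theta)],
    ])
    scale = np.diag([scale_z, scale_x, scale_y])
    return rotation @ scale

# With no rotation, the map is a pure scaling
L0 = initial_linear_map(0, 0.9, 0.9, 0.9)
print(np.allclose(L0, 0.9 * np.eye(3)))  # True

# A rotation does not change the volume scaling, so det(L) = 0.9**3
L30 = initial_linear_map(30, 0.9, 0.9, 0.9)
print(np.isclose(np.linalg.det(L30), 0.9 ** 3))  # True
```

Checking the determinant is a quick way to confirm that the initial guess only rotates and rescales the atlas, without reflecting it.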

Now, we can perform alignment of the atlas to our target slice.

[16]:

%%time

# run LDDMM

# select a device if a GPU is available (default device for STalign.LDDMM is cpu)

if torch.cuda.is_available():

    device = 'cuda:0'

else:

    device = 'cpu'

# returns a dictionary with A = affine transform, v = velocity, xv = pixel locations of velocity points, Xs = sample points used below to interpolate the atlas

transform = STalign.LDDMM_3D_to_slice(

    xI,I,xJ,J,

    T=T,L=L,

    nt=4,niter=2000,

    device='cpu', # this run used the CPU; the device selected above is not passed here

    sigmaA = sigmaA, #standard deviation of artifact intensities

    sigmaB = sigmaB, #standard deviation of background intensities

    sigmaM = sigmaM, #standard deviation of matching tissue intensities

    muA = muA, #average of artifact intensities

    muB = muB #average of background intensities

)

/Users/kalenclifton/.local/share/virtualenvs/STalign-wXTCUYXW/lib/python3.10/site-packages/torch/functional.py:504: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at /Users/runner/work/pytorch/pytorch/pytorch/aten/src/ATen/native/TensorShape.cpp:3527.)

return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]

/Users/kalenclifton/STalign/docs/notebooks/../../STalign/STalign.py:1616: UserWarning: Data has no positive values, and therefore cannot be log-scaled.

axE[2].set_yscale('log')

CPU times: user 32min 16s, sys: 17min 22s, total: 49min 39s

Wall time: 24min 32s

[17]:

A = transform['A']

v = transform['v']

xv = transform['xv']

Xs = transform['Xs']

Once we have aligned the atlas to our target image, we can project the brain regions defined in the atlas to our target image and export these annotations, along with information about the positions of the atlas that align with the slice.

[18]:

df = STalign.analyze3Dalign(labelfile, xv,v,A, xJ, dx, scale_x=scale_x, scale_y=scale_y,x=x,y=y, X_=X_, Y_=Y_, namesdict=namesdict,device='cpu')

/Users/kalenclifton/STalign/docs/notebooks/../../STalign/STalign.py:1725: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

A = torch.tensor(A)

/Users/kalenclifton/STalign/docs/notebooks/../../STalign/STalign.py:1726: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

v = torch.tensor(v)

/Users/kalenclifton/STalign/docs/notebooks/../../STalign/STalign.py:1738: UserWarning: To copy construct from a tensor, it is recommended to use sourceTensor.clone().detach() or sourceTensor.clone().detach().requires_grad_(True), rather than torch.tensor(sourceTensor).

XJ = torch.tensor(XJ)

[19]:

df

[19]:

|  | coord0 | coord1 | coord2 | x | y | struct_id | acronym |
|---|---|---|---|---|---|---|---|
| 0 | 2400.920672 | 590.914510 | -5191.190533 | 156.563284 | 4271.326432 | 0 | bg |
| 1 | 2400.787913 | 579.836458 | -5191.778278 | 156.509284 | 4256.962431 | 0 | bg |
| 2 | 2400.392034 | 546.602489 | -5193.539680 | 159.965284 | 4228.180431 | 0 | bg |
| 3 | 2401.540063 | 656.669570 | -5175.261835 | 167.579284 | 4323.868433 | 0 | bg |
| 4 | 2401.455700 | 635.227030 | -5188.836504 | 160.559284 | 4308.802433 | 0 | bg |
| ... | ... | ... | ... | ... | ... | ... | ... |
| 78324 | 2381.469307 | 538.424275 | 5210.772240 | 9154.007886 | 4445.528506 | 0 | bg |
| 78325 | 2380.424358 | 517.317267 | 5138.578401 | 9088.829884 | 4423.712505 | 0 | bg |
| 78326 | 2381.278031 | 515.380913 | 5231.900009 | 9170.261887 | 4431.758506 | 0 | bg |
| 78327 | 2380.219083 | 506.093842 | 5137.498692 | 9086.183884 | 4417.016505 | 0 | bg |
| 78328 | 2380.015031 | 506.231228 | 5114.004041 | 9073.169884 | 4419.770505 | 0 | bg |

78329 rows × 7 columns

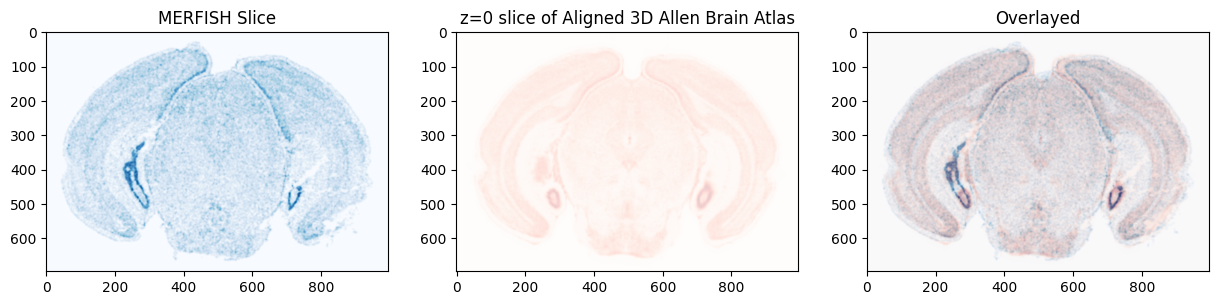
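
Once each cell has a region label, a quick summary is the number of cells per region. The sketch below uses a small made-up table with the same acronym and struct_id columns as the output above. The rows and struct_id values are hypothetical, and treating the 'bg' label as background is an assumption based on the rows shown.

```python
import pandas as pd

# Hypothetical miniature version of the annotated table
df_demo = pd.DataFrame({
    "x": [156.5, 3000.0, 3010.0, 3100.0, 9154.0],
    "y": [4271.3, 2500.0, 2510.0, 2600.0, 4445.5],
    "struct_id": [0, 1, 1, 2, 0],
    "acronym": ["bg", "VISp4", "VISp4", "VISp5", "bg"],
})

# Drop rows labeled as background, then count cells per region
annotated = df_demo[df_demo["acronym"] != "bg"]
counts = annotated["acronym"].value_counts()
print(counts.to_dict())  # {'VISp4': 2, 'VISp5': 1}
```

On the real table, the same two lines give a per-region cell count that can be checked against the plots that follow.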

Now, we can explore our alignment and brain region annotations!

We can visualize the MERFISH slice overlaid with the matched section in the Allen Brain Atlas.

[20]:

It = torch.tensor(I,device='cpu',dtype=torch.float64)

AI = STalign.interp3D(xI,It,Xs.permute(3,0,1,2),padding_mode="border")

Ishow_source = ((AI-torch.amin(AI,(1,2,3))[...,None,None])/(torch.amax(AI,(1,2,3))-torch.amin(AI,(1,2,3)))[...,None,None,None]).permute(1,2,3,0).clone().detach().cpu()

Jt = torch.tensor(J,device='cpu',dtype=torch.float64)

Ishow_target = Jt.permute(1,2,0).cpu()/torch.max(Jt).item()

import matplotlib as mpl

fig,ax = plt.subplots(1,3, figsize=(15,5))

ax0 = ax[0].imshow(Ishow_target, cmap = mpl.cm.Blues,alpha=0.9)

ax[0].set_title('MERFISH Slice')

ax1 = ax[1].imshow(Ishow_source[0,:,:,0], cmap = mpl.cm.Reds,alpha=0.2)

ax[1].set_title('z=0 slice of Aligned 3D Allen Brain Atlas')

ax2 = ax[2].imshow(Ishow_target, cmap = mpl.cm.Blues,alpha=0.9)

ax2 = ax[2].imshow(Ishow_source[0,:,:,0], cmap = mpl.cm.Reds,alpha=0.3)

ax[2].set_title('Overlayed')

plt.show()

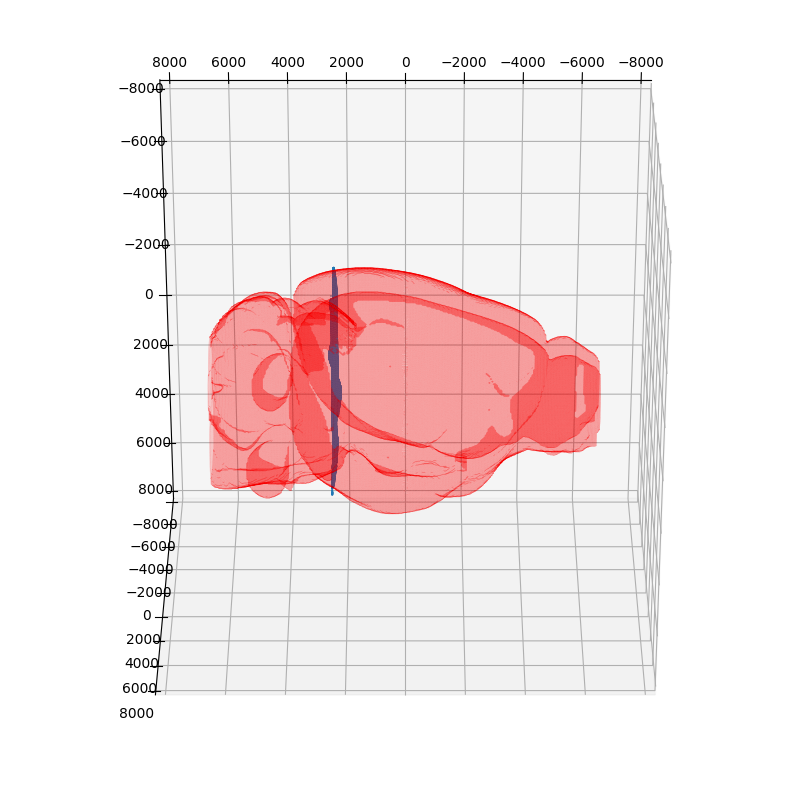

We can view the slice of the atlas that aligned to the target image. Note that the slice is curved with respect to the Allen Atlas, which reflects that the target section is not truly coronal.

[21]:

verts, faces, normals, values = skimage.measure.marching_cubes(vol>0,0.8,spacing = dxA)

verts = verts + np.array([x[0] for x in xA])

fig = plt.figure(figsize=(10, 10))

ax = fig.add_subplot(111, projection='3d')

mesh = Poly3DCollection(verts[faces])

#mesh.set_edgecolor('k')

mesh.set_facecolor('r')

mesh.set_alpha(0.2)

ax.add_collection3d(mesh)

ax.set_xlim(-8000, 8000) # axis limits in atlas coordinate units

ax.set_ylim(-8000, 8000)

ax.set_zlim(-8000, 8000)

# note: x and y are reassigned here to atlas coordinates, replacing the cell positions loaded earlier

x = df['coord0']

y = df['coord1']

z = df['coord2']

#ax.grid(True)

#ax.set_xticks([])

#ax.set_yticks([])

#ax.set_zticks([])

#pos1 = ax.get_position()

#pos = [pos1.x0 +0.3, pos1.y0+0.3, pos1.width/2, pos1.height/2]

#ax.set_position(pos)

ax.scatter3D(x,y,z, s= 0.1)

ax.view_init(-240, 90)

#ax.view_init(-90, 120)

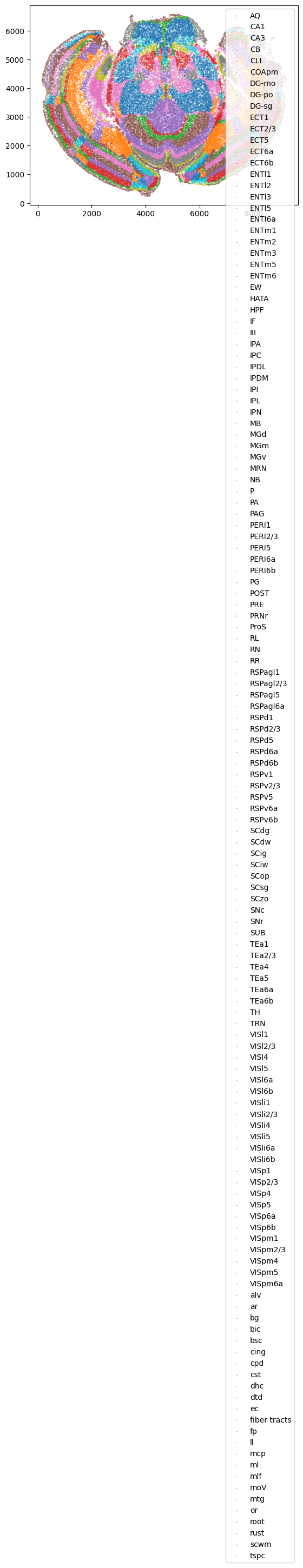

We can also plot all of the brain regions that are captured in the slice, as defined by our alignment.

[22]:

STalign.plot_brain_regions(df)

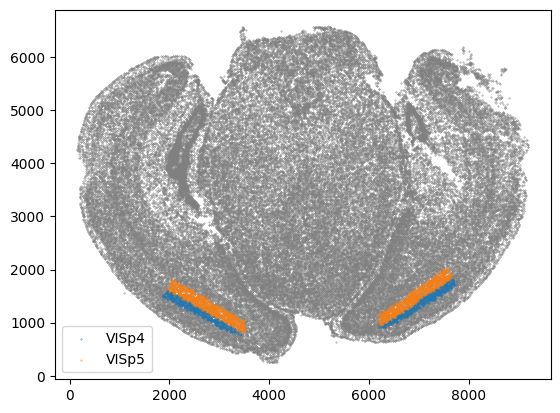

If we are interested in only a few regions, such as VISp4 and VISp5 in this example, we can plot those regions using the following function.

[23]:

brain_regions = ['VISp4', 'VISp5']

STalign.plot_subset_brain_regions(df, brain_regions)
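
The same list of acronyms can also be used to pull the matching rows out of the table and export them. This is a sketch with a hypothetical miniature table. It writes to an in-memory buffer so that nothing is saved to disk; on real data a file path would take the buffer's place.

```python
import io
import pandas as pd

# Hypothetical miniature version of the annotated table
df_demo = pd.DataFrame({
    "x": [156.5, 3000.0, 3010.0, 3100.0],
    "y": [4271.3, 2500.0, 2510.0, 2600.0],
    "acronym": ["bg", "VISp4", "VISp5", "CA1"],
})

brain_regions = ["VISp4", "VISp5"]

# Keep only the rows whose acronym is in the list of regions
subset = df_demo[df_demo["acronym"].isin(brain_regions)]

# Export the subset as CSV text
buffer = io.StringIO()
subset.to_csv(buffer, index=False)
print(buffer.getvalue())
```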
